Notes on using ChatGPT for software work

2026-02-11 09:06 by Ian

#define

- "Gestalt": See Wikipedia for the general sense. Here, I use it to mean any input that cannot be broken into subunits without losing its meaning. Images are the canonical example; graphs and diagrams also qualify.

- "Linear": Input that can be understood by summing a sequence of atoms (or tokens). Text is the canonical example, but the category may also include speech.

Things AI is good at

Searching through mountains of documentation

I am a frequent user of Espressif's IDF (IoT Development Framework). Several versions are in concurrent use, and breaking changes are introduced regularly. The documentation is excellent, but it is easy to find yourself reading the wrong version of it.

I also find it useful as an NLP front-end for searching through academic journals. It can read PDFs, but may as well be blind to any gestalt they might contain.

Writing code from templates

Here is a link to a ChatGPT session where I had it write state machine code from a template. It did a fairly decent job, but I would never trust it as-is. After I finished auditing and reworking it, the code from that session held up pretty well under multi-threaded concurrency tests, and is even decently readable. I've had numerous such sessions with ChatGPT, and it produces work-saving results more often than not.
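The template from that session isn't reproduced here, so the following is only a minimal sketch of the general shape of a table-driven state machine in C++17. The states, events, and the `Machine` class are hypothetical; the mutex reflects the requirement that the result survive multi-threaded tests.

```cpp
#include <array>
#include <cstddef>
#include <mutex>

// Hypothetical states and events; "Count" sizes the transition table.
enum class State : std::size_t { Idle, Running, Done, Count };
enum class Event : std::size_t { Start, Finish, Reset, Count };

constexpr std::size_t kStates = static_cast<std::size_t>(State::Count);
constexpr std::size_t kEvents = static_cast<std::size_t>(Event::Count);

class Machine {
 public:
  // Returns true if the event caused a transition, false if it was ignored.
  bool dispatch(Event e) {
    std::lock_guard<std::mutex> lock(mutex_);  // serialize concurrent dispatchers
    const State next = kTable[idx(state_)][idx(e)];
    if (next == state_) return false;
    state_ = next;
    return true;
  }

  State state() const {
    std::lock_guard<std::mutex> lock(mutex_);
    return state_;
  }

 private:
  static constexpr std::size_t idx(State s) { return static_cast<std::size_t>(s); }
  static constexpr std::size_t idx(Event e) { return static_cast<std::size_t>(e); }

  // Rows: current state. Columns: Start, Finish, Reset.
  // Entry: next state (an entry equal to the row's own state means "ignored").
  static constexpr std::array<std::array<State, kEvents>, kStates> kTable{{
      /* Idle    */ {{State::Running, State::Idle, State::Idle}},
      /* Running */ {{State::Running, State::Done, State::Idle}},
      /* Done    */ {{State::Done,    State::Done, State::Idle}},
  }};

  mutable std::mutex mutex_;
  State state_ = State::Idle;
};
```

Keeping every transition in one table makes the behavior easy to audit line by line, which is most of the work when reviewing generated code like this.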

Writing well-scoped implementations of commodity algorithms

There are hundreds of examples of Perlin noise generators on the public internet. I was facing decision paralysis over it, so I had the robot write this function (and a few others). Then I evaluated it and marked off a few TODO points that were at the crux of my indecision. This unblocked another effort and narrowed the scope of the choices I have yet to make. It only took an hour, and now I can use a class I've been wanting for years.
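For readers unfamiliar with the algorithm, here is a minimal 2D sketch in the style of Ken Perlin's 2002 "improved noise" (quintic fade curve, hashed gradients, bilinear blend). It is not the function ChatGPT wrote. The seeded `std::mt19937` shuffle of the permutation table and the eight-direction gradient set are assumptions of this sketch, and are the kind of design choices that have many defensible answers.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstdint>
#include <numeric>
#include <random>

class Perlin2D {
 public:
  // Builds a 256-entry permutation from the seed, duplicated to 512 to avoid wrapping.
  explicit Perlin2D(std::uint32_t seed) {
    std::array<int, 256> p{};
    std::iota(p.begin(), p.end(), 0);
    std::mt19937 rng(seed);
    std::shuffle(p.begin(), p.end(), rng);
    for (int i = 0; i < 512; ++i) perm_[i] = p[i & 255];
  }

  // Returns a value in [-1, 1]; exactly 0 at integer lattice points.
  double noise(double x, double y) const {
    const double fx = std::floor(x), fy = std::floor(y);
    const int xi = static_cast<int>(fx) & 255;  // lattice cell, wrapped to the table size
    const int yi = static_cast<int>(fy) & 255;
    const double xf = x - fx, yf = y - fy;      // position inside the cell, in [0, 1)
    const double u = fade(xf), v = fade(yf);

    // Hash each of the four cell corners.
    const int aa = perm_[perm_[xi] + yi];
    const int ab = perm_[perm_[xi] + yi + 1];
    const int ba = perm_[perm_[xi + 1] + yi];
    const int bb = perm_[perm_[xi + 1] + yi + 1];

    // Blend the corner gradient contributions along x, then along y.
    const double x1 = lerp(u, grad(aa, xf, yf), grad(ba, xf - 1, yf));
    const double x2 = lerp(u, grad(ab, xf, yf - 1), grad(bb, xf - 1, yf - 1));
    return lerp(v, x1, x2);
  }

 private:
  // Quintic fade: 6t^5 - 15t^4 + 10t^3.
  static double fade(double t) { return t * t * t * (t * (t * 6 - 15) + 10); }
  static double lerp(double t, double a, double b) { return a + t * (b - a); }

  // Dot product of (x, y) with one of eight gradient directions chosen by the hash.
  static double grad(int hash, double x, double y) {
    switch (hash & 7) {
      case 0: return x + y;
      case 1: return -x + y;
      case 2: return x - y;
      case 3: return -x - y;
      case 4: return x;
      case 5: return -x;
      case 6: return y;
      default: return -y;
    }
  }

  std::array<int, 512> perm_{};
};
```

Sampling at scaled coordinates, for example `noise(x * 0.05, y * 0.05)`, gives smooth variation across a grid.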

Similarly, I've been using ChatGPT to fill out stub functions elsewhere in my code. It has caught a few subtle bugs in the process, and occasionally teaches me something new. If I have learned anything in the past year about how to work effectively with the robots, it is this: the more tightly scoped the task, and the more effort you put into the original request, the more likely it is that what you get back will save (rather than cost) time. This is a hard habit to break after decades of working with human programmers, who usually work better with short snatches of conversation. The robots don't have our short-term memory limitations, and can handle monoliths of text much more easily.

Things AI should be good at (but isn't)

Porting complicated code from the top level

Case in point: in this session, I tried to port one of my PHP programs to C++. I eventually gave up. I blame myself to a non-zero extent for the failure: I left too much to invention for the robot to cope with effectively, and it produced unworkable slop.

In retrospect, I would have had better results by taking the task apart into tightly-scoped, well-contained subtasks, rather than asking for everything all at once.

Writing components under complicated private frameworks

I originally tried to use ChatGPT to write this driver, but it turned out to be useful only for extracting tables of data and writing boilerplate (like enums). ChatGPT seems to have read a large amount of Arduino code, and when asked for drivers, it tends to stay within that milieu (including assuming that I/O calls are blocking). Asking for an asynchronous structure helped only marginally, since it couldn't read the datasheet and infer the timing constraints of the part it was trying to use.
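To make the blocking-versus-asynchronous distinction concrete, here is a minimal sketch. The Arduino habit is to call `delay()` between starting a conversion and reading it; the sketch instead records a timestamp and is polled until the conversion time has elapsed. The `Bus` struct, the `SensorDriver` class, and the conversion time are all hypothetical, and that conversion time is exactly the kind of datasheet constraint ChatGPT couldn't infer.

```cpp
#include <cstdint>
#include <functional>
#include <optional>
#include <utility>

// Hypothetical bus interface; a real driver would wrap the platform's I2C/SPI calls.
struct Bus {
  std::function<void()> start_conversion;
  std::function<std::uint16_t()> read_result;
};

class SensorDriver {
 public:
  // conversion_us: worst-case conversion time, assumed to come from the part's datasheet.
  SensorDriver(Bus bus, std::uint32_t conversion_us)
      : bus_(std::move(bus)), conversion_us_(conversion_us) {}

  // Call repeatedly from a main loop or timer; never blocks.
  // Returns a reading once one is ready, otherwise std::nullopt.
  std::optional<std::uint16_t> poll(std::uint32_t now_us) {
    switch (state_) {
      case State::Idle:
        bus_.start_conversion();
        started_us_ = now_us;
        state_ = State::Converting;
        return std::nullopt;
      case State::Converting:
        // Unsigned subtraction stays correct across 32-bit timer wraparound.
        if (static_cast<std::uint32_t>(now_us - started_us_) < conversion_us_) {
          return std::nullopt;
        }
        state_ = State::Idle;
        return bus_.read_result();
    }
    return std::nullopt;
  }

 private:
  enum class State { Idle, Converting };
  Bus bus_;
  std::uint32_t conversion_us_;
  std::uint32_t started_us_ = 0;
  State state_ = State::Idle;
};
```

Because `poll()` returns immediately, several such drivers can share one loop without any of them stalling the others.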

On a related note, asking it to implement platform shims for new microcontrollers was unproductive, though the output pretended otherwise. Rather than accessing registers directly (as instructed), it would call some other developer's platform API instead. ChatGPT doesn't appear able to respect the access constraints of hardware registers on any of the three microcontrollers I have tried.
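For context, "respecting access constraints" can be made mechanical in C++. The minimal sketch below wraps a memory-mapped register and rejects, at compile time, reads of write-only registers and writes to read-only ones. The `Register` template and `Access` enum are hypothetical; on real hardware the address would come from the part's reference manual, while for host testing it can point at ordinary memory.

```cpp
#include <cstdint>

enum class Access { ReadOnly, WriteOnly, ReadWrite };

// Wraps one memory-mapped register and enforces its access mode at compile time.
template <typename T, Access A>
class Register {
 public:
  explicit Register(std::uintptr_t address)
      : reg_(reinterpret_cast<volatile T*>(address)) {}

  T read() const {
    static_assert(A != Access::WriteOnly, "register is write-only");
    return *reg_;
  }

  void write(T value) {
    static_assert(A != Access::ReadOnly, "register is read-only");
    *reg_ = value;
  }

  // Read-modify-write only makes sense for registers that are readable and writable.
  // Written as a plain assignment, since compound assignment to volatile is deprecated in C++20.
  void set_bits(T mask) {
    static_assert(A == Access::ReadWrite, "read-modify-write needs a read/write register");
    *reg_ = static_cast<T>(*reg_ | mask);
  }

 private:
  volatile T* reg_;
};
```

With this in place, calling `write()` on a `Register<std::uint32_t, Access::ReadOnly>` fails to compile rather than silently poking hardware.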

Reading schematic diagrams and diagramming state machines with Mermaid

Humans have a brain structure that allows for effortless cross-synthesis of linear and gestalt knowledge. The robots do not. If I ask a junior embedded engineer to read a schematic and write firmware to control it, I have no doubts about their ability to do it. Here is my session full of fail for anyone who wants to examine it.

Of all the things I wish AI could do reliably, this is the one I want most. Building finite state diagrams is tedious and easy, and offloading it onto a machine would save me many hours each week.

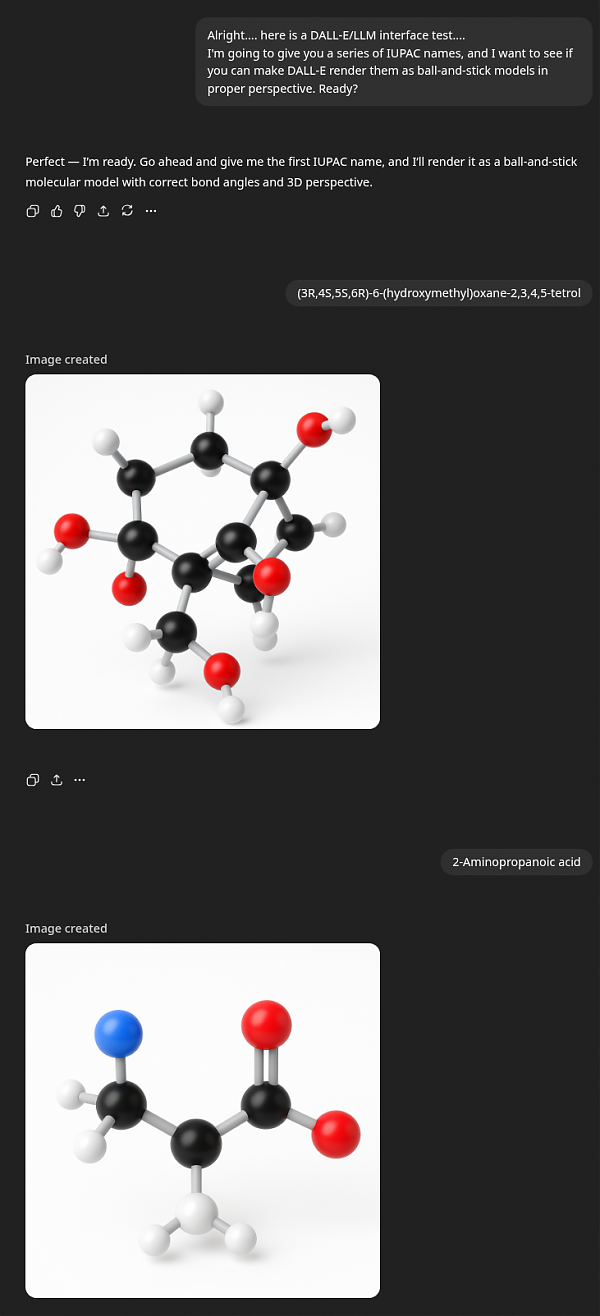
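The purely mechanical half of the job is easy to automate once a transition table exists. The minimal sketch below emits Mermaid `stateDiagram-v2` source from such a table; it does nothing about the hard half (deriving the table from a schematic). The `Transition` struct and `to_mermaid` function are hypothetical, and state names are assumed to be plain identifiers without spaces.

```cpp
#include <string>
#include <vector>

struct Transition {
  std::string from;
  std::string to;
  std::string event;
};

// Emits Mermaid stateDiagram-v2 source: an initial-state arrow, then one labeled edge per transition.
std::string to_mermaid(const std::string& initial, const std::vector<Transition>& transitions) {
  std::string out = "stateDiagram-v2\n";
  out += "    [*] --> " + initial + "\n";
  for (const auto& t : transitions) {
    out += "    " + t.from + " --> " + t.to + " : " + t.event + "\n";
  }
  return out;
}
```

For example, `to_mermaid("Idle", {{"Idle", "Running", "start"}, {"Running", "Idle", "stop"}})` produces a two-state diagram that Mermaid can render directly.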

IUPAC

One of the most difficult things to master in organic chemistry is IUPAC naming.

For the benefit of readers who are not chemists: the molecules that ChatGPT drew are nonsense. Nothing about them is plausible: wrong atom counts, wrong bond counts, wrong bond angles.

Summation of my current opinion of using AI to write software

- LLMs are state-of-the-art expert systems. They can teach you things, but they cannot relieve you of the burden of knowledge. If that isn't already obvious to you, AI could easily stifle your intellectual development.

- It certainly isn't going to replace software engineers.

- It probably will reduce the workload of software authorship for people who are already good at the craft.

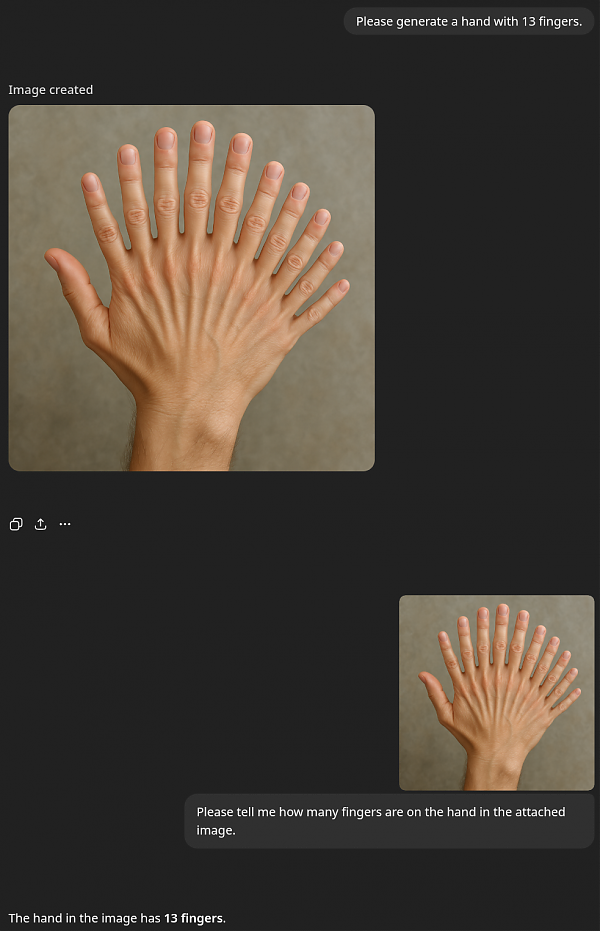

AFAICT, the deficits in ChatGPT (especially those related to hardware engineering) are mostly a consequence of an architectural limitation: having two separate models, one for linear input and one for gestalt (DALL-E). Case in point:

Most readers will reject the following statement, but it is absolutely true: Your brain can't count.

It can only subitize.

Subitizing is possible because small natural numbers are associated with distinct visual symmetries. But once the number gets above about 7, most neural networks (including brains) can no longer subitize accurately, and must count instead. And counting is an act of a mind, requiring education.

I'll probably keep paying for ChatGPT. Because even if I am not optimistic about its future as an engineer or chemist, it is at least a competent poet.
