2024.08.03: My thoughts on testing and doc

2024-08-02 23:25 by Ian

Here are some of my favorite pieces of software doc that I've produced to support my creations. Testing is wrapped up in this post because the two typically go together. Their purpose is either to reduce (doc) or to trap (testing) human error. And for better or worse, neither is strictly required for software to do its job.

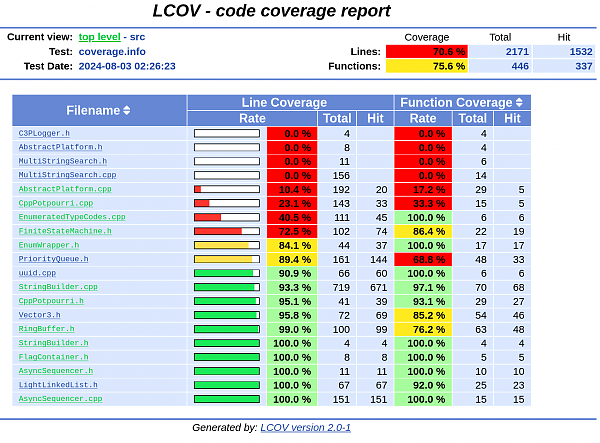

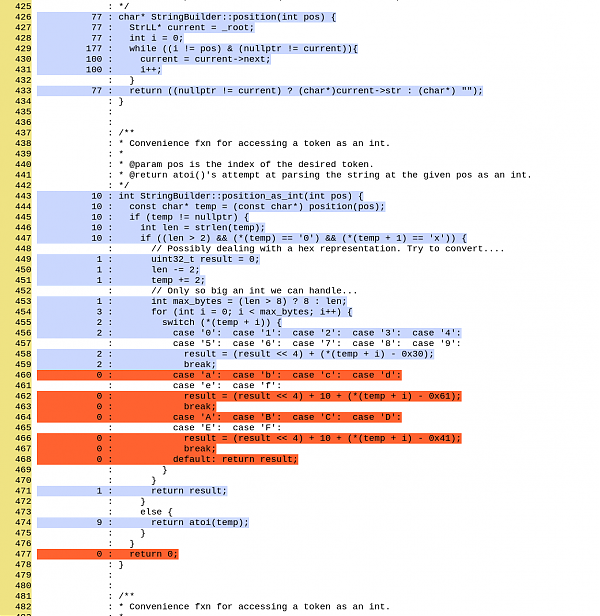

Coverage reports and Doxygen

These are my bare-minimum documentation products for every piece of complicated software I write. Coverage report summaries look like this. You can drill down into specific source files and see the execution count for every line.

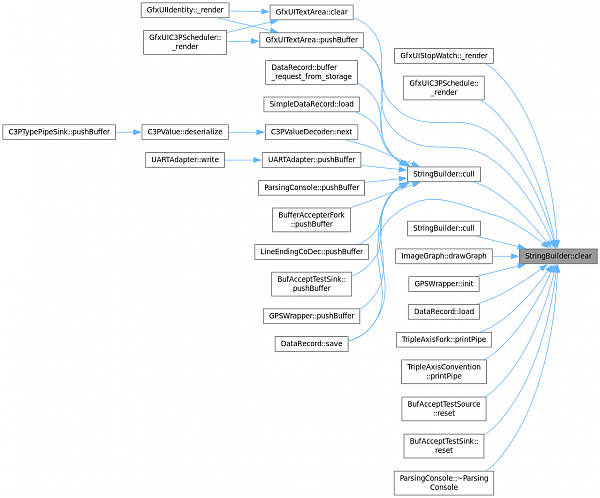

Doxygen output can vary in quality, according to taste. But Doxygen can be bent into such a shape that it generates easy-to-navigate call graphs and class relationship diagrams with no investment in code comments. These are invaluable for anyone trying to understand a piece of software with thousands of functions.

If I am specifying the pipelines, those two bodies of output are stored alongside the build artifacts from the exact authenticated CI pipeline run that produced them, complete with audit logs held by the IT department.

If unit tests don't pass, the Versioning, Doc, Build, and Release stages of the pipeline will not proceed.

Thus, a binary release is not possible unless testing passes and documentation is available for it first, before anyone can install it and have a problem.
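The gating rule is simple enough to model in a few lines. What follows is a minimal Python sketch, not the configuration of any particular CI system: it assumes each stage is a callable returning pass/fail, and `run_pipeline` and `make_stages` are hypothetical names. Most CI systems let you express the same ordering in their own pipeline configuration.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: Sequence[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order, stopping at the first failure.

    Returns the names of the stages that completed, and the name of the
    stage that failed (None if every stage passed).
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            # A failed stage blocks every stage after it.
            return completed, name
        completed.append(name)
    return completed, None


def make_stages(unit_tests: Callable[[], bool]) -> List[Stage]:
    """Hypothetical stage list: unit tests gate Versioning, Doc, Build, Release."""

    def ok() -> bool:
        # Placeholder for the real stage work; always succeeds in this sketch.
        return True

    return [
        ("Unit tests", unit_tests),
        ("Versioning", ok),
        ("Doc", ok),
        ("Build", ok),
        ("Release", ok),
    ]


if __name__ == "__main__":
    print(run_pipeline(make_stages(lambda: False)))  # ([], 'Unit tests')
    print(run_pipeline(make_stages(lambda: True)))
```

Because Release is the last stage, no binary can come out of a run in which the tests or the doc stage failed.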

Non-engineers may not appreciate just how much time this saves everyone. In my experience, it lets everyone separate problems in their own part of the code base from problems caused by others working elsewhere in it. It also spares people outside the software team from preventable bugs that users would otherwise have to discover and report, and that would then become support tickets, issues, meetings, and debugging efforts, and finally a patch and a release that should never have been needed.

Anecdote: NTDI

But as I painfully learned recently, it takes team commitment, and management needs to care enough about reliability to allocate the manpower to set it up in the first place.

My favorite team in this regard was at Neustar. None of them needed to be convinced that test coverage was fundamental, both to know and to improve whenever possible. One man on the team was designated the TestProctor because of his conscientiousness and neutrality (he worked on UI stuff, and the testing was for the back-end). He was responsible for writing the testing policies and generally running the framework. He also helped Josh and me write the TypeScript mocks that simulated real hardware. Only with his buy-in could Josh and I set the most authoritarian commit rule I've ever endorsed in a code base:

If test coverage has fallen since the last version (in either absolute count or ratio), the CI system will reject your commit to the master branch.
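This kind of rule is sometimes called a coverage ratchet. Below is a minimal Python sketch of the check, assuming the CI job can read covered and total line counts from the previous version's coverage summary and from the candidate commit's. `CoverageSummary` and `coverage_regressed` are hypothetical names, not part of any coverage tool. A CI step would reject the commit (for example, by exiting non-zero) whenever `coverage_regressed` returns True.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class CoverageSummary:
    """Line-coverage totals, as found in a coverage report summary."""

    covered_lines: int  # lines executed at least once by the test suite
    total_lines: int  # lines instrumented for coverage

    def ratio(self) -> Fraction:
        # Fraction keeps the comparison exact (no floating-point rounding).
        if self.total_lines == 0:
            return Fraction(0)
        return Fraction(self.covered_lines, self.total_lines)


def coverage_regressed(baseline: CoverageSummary, candidate: CoverageSummary) -> bool:
    """True if the candidate lowers coverage by absolute count OR by ratio."""
    fell_in_count = candidate.covered_lines < baseline.covered_lines
    fell_in_ratio = candidate.ratio() < baseline.ratio()
    return fell_in_count or fell_in_ratio


if __name__ == "__main__":
    last_version = CoverageSummary(covered_lines=800, total_lines=1000)
    # Untested code added: more lines covered, but a lower ratio.
    print(coverage_regressed(last_version, CoverageSummary(810, 1100)))  # True
    # Covered count fell, even though the ratio rose slightly.
    print(coverage_regressed(last_version, CoverageSummary(790, 980)))  # True
    # New code arrives together with its own tests.
    print(coverage_regressed(last_version, CoverageSummary(820, 1010)))  # False
```

Checking both conditions closes the obvious loopholes. The ratio check catches new code that arrives without tests, and the count check catches tests that quietly disappear.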

I've never had more confidence in the integrity of software I'd helped write than I did for the NTDI constellation of repos and the functionally patterned Java 8 version we wrote after it. Finding bugs became trivially easy, and usually none of us even saw each other's bugs, because it was rare for anyone to do something so wrong that it slipped past every one of the tests we had all agreed to force ourselves to write. Merges to master became brief and targeted, and new features entered the code base complete and ready (or fully commented out and inactive until the arguments surrounding them were settled).

If any alumnus of the Neustar IoT dept ever reads this, this was my favorite thing about working with you guys. Everyone knew the value of the fundamentals, and we were all there long enough to see it pay off.

SV Dialplan

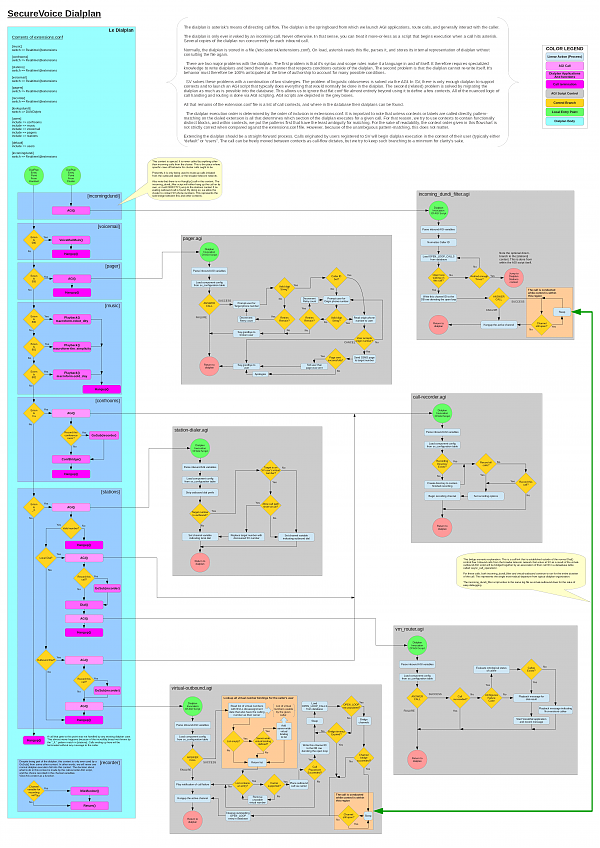

For those who don't know, a "dialplan" is a program for routing phone calls. Dialplans are probably at the foundation of every phone call you've ever had with a computer.

There might be some form of documentation specific to dialplans, but if there is, I don't know about it. Every dialplan I've read kept its commentary inline with the code, just as you'd expect for any other kind of program. But the dialplan wasn't the only piece of the call flow, and I didn't want the next engineer to have to jump between doc in four separate locations (and three separate languages) to understand why their call was misbehaving.

So I made this giant annotated flowchart in OpenOffice Draw over the course of a week, once the product was nearly complete. We printed it on posters and hung them in the engineering area. I've hung it on my office wall at every job since.

My protégé for the product was a good choice on CellTrust's part. He picked up everything (literally everything) about the project and became its lead and owner. That was no easy task, considering he was new to Asterisk when he was hired. By the time I left, he was writing his own additions to the architecture in Perl (most of my AGI scripting was done in PHP) and fixing bugs I had missed in the FIPS-compliant C++ cryptographic daemon.

Carlos, if you ever read this, I really enjoyed working with you and I hope you're doing well. I left my work in good hands.

The anti-pattern

My worst experiences on software teams have been (not surprisingly) in cases where there are no tests, no doc, and no one but me cares. For five years I was at a company that will remain nameless, and I'm fairly certain that the 10,000 lines of unit tests I wrote for them on weekends and late nights were the only unit tests the company had ever seen in its multi-decade existence. I was the sole contributor to automated testing. As far as I can tell, none of the other engineers ever ran the unit tests before committing. They would wait until my CI pipeline happened to catch their breakage with my existing tests, and then they'd ignore the failure reports and release firmware anyway.

With no doc.

The release process consisted of shovelling the firmware over to a mortal man to run repetitive integration tests by hand. There was zero automation for hardware testing of firmware releases.

By the middle of 2022, I was ready to turn on a hardware CI/CD pipeline that would perform complex testing of firmware builds automatically (and on real hardware) upon merge into master. It would have allowed their lone test engineer to cover at least 10x the test area with less work.

They didn't want it. And I had no authority (product or org-chart derived) to help matters.

But ignoring my advice on best practices for a year didn't stop them from blaming my choices for their buggy builds and missed deadlines.

You guys know best, I suppose.
